Stories

The University of Mannheim has 12,000 students and 1,600 employees. On the 11th floor of the RUM building, high above Mannheim Palace and the heads of the students, the university data centre has to deliver roughly the same level of performance as the data centres of large companies such as KUKA or Zalando. A team of 15 people keeps the data network, the media technology and the infrastructure running day to day.

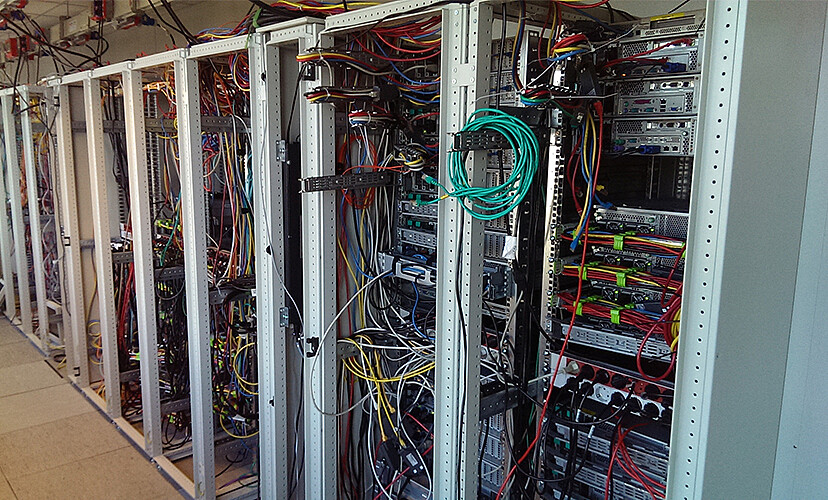

A special feature of the university data centre is its “supercomputer”, which ranks 80th on the list of the world’s 100 fastest computers. That level of performance has to be safeguarded. In 2010, the University of Mannheim wanted to upgrade the climate control technology in the machine room to cope with the increased performance. After analysing the current status, Ralf-Peter Winkens (head of the data network and media technology in the data centre) decided to redesign the entire data centre. Since August 2015, it has housed a total of 53 racks and 21 side coolers in an area of 100 square metres. The extension of the data centre was entrusted to SCHÄFER IT-Systems, and for good reason: “I first looked at a number of companies before choosing the SCHÄFER concept. In 2010, the same company installed the coolers, but in a central cold aisle and not in the individual cabinets. Today, cold aisle enclosures are standard, so there are good reasons why this approach is better and more reliable,” says Winkens.

Because the data centre sits directly under the roof, the paths for its three-part cooling system are short. The first part is conventional cooling with a compressor installed directly on the roof of the building. The second is an indirect free cooling system of 20 m² that uses the cold ambient air on the roof to cool the entire circuit on its way to the water-cooled LOOPUS Side Coolers. The side coolers draw in warm air from the back of the room, cool it down and discharge it into the enclosed cold aisle. In this way, high heat loads per rack can be handled and flexible, effective climate control achieved. The third part is a direct free cooling system that blows 8,800 m³/h of outside air directly into the warm aisle section, thus relieving the compressor on the roof. In the event of a complete power outage, an emergency cooling system with a diesel power generator takes over.

In parallel, an emergency data centre was commissioned on the campus. About 500 metres from the main data centre, further SCHÄFER racks make up seven emergency data cabinets, whose temperature is controlled by three side coolers. Besides serving as a backup, this is also where the source of the main data centre's performance can be found: here the university has its broadband connection to BelWü, Baden-Württemberg’s extended LAN. This is the federal state’s scientific network, to which all universities, universities of applied sciences and over 1,000 schools are connected. In addition, a separate dedicated line connects the emergency data centre to DE-CIX in Frankfurt, Germany’s main internet hub. This is how the University of Mannheim’s data centre, with the help of SCHÄFER IT-Systems, manages to keep a cool head and guarantee its own high performance.